Post Webhooks of AWS SNS Messages with this Docker Microservice

Sometimes I wish AWS was simpler, more plug and play. I love all the flexibility, but sometimes to accomplish what I need to get done I have to use multiple AWS capabilities and spend a ton of time with IAM policies. At https://dronze.com we are making DevOps easier for this reason.

One of the things we like about the web and its open nature is webhooks. Webhooks are intended to let apps talk to each other with nothing more than the concept: “Thou shalt POST json when stuff happens”. Most cloud SaaS tools for DevOps, like Travis and GitHub, have simple ways to have notifications sent to an endpoint on the internet.

At Dronze we are trying to provide a way for the entire DevOps environment to notify the team. This requires making it easy for every part of a team’s environment to send notifications of what is going on. To us that means webhooks. Sadly, not everyone is on board with natively supporting webhooks as a way to notify events, so sometimes it’s not as easy as it should be.

This article is a cookbook on how to build a Docker microservice to syndicate your SNS topic to a webhook endpoint.

Just Give It To Me!

- The source code for the entire project is available on GitHub here.

- Pull and run a fully built docker container available on DockerHub here.

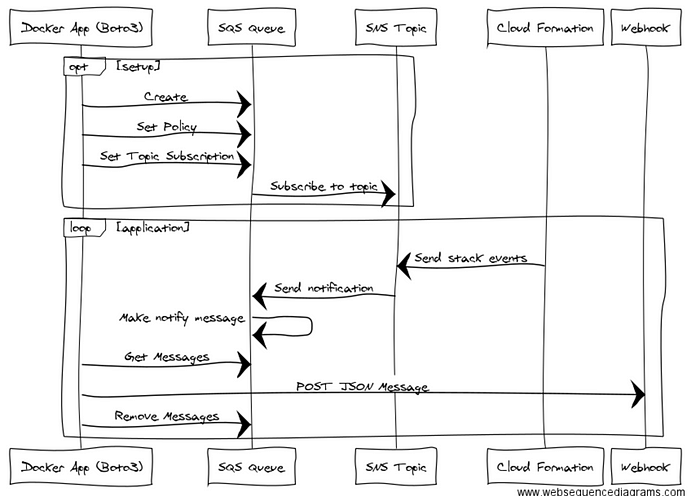

The Sequences

All anyone has to do is send stuff to a SNS topic and everything will be sent to our webhook. Easy right?

Nope. But that is not a big deal, let’s make a black box that simplifies the complexity so others don’t have to struggle like I did. So 5 primary actors are involved in this dance:

- CloudFormation: The stack sends notifications to an SNS topic.

- The SNS Topic: Receives notifications during the stack lifecycle.

- The SQS Queue: Converts the notifications to queued messages.

- The Boto3 Docker App: What this article is mostly about. It does all the heavy lifting of getting messages off the queue, sending them to the webhook and removing them from the queue.

- The Webhook: The destination.

Two primary sequences are required to make this easy for the end user:

- The Setup: Create the SQS queue if it doesn’t exist, set up the policy, and subscribe to notifications from the topic automatically.

- The Application: Loop and listen for messages, try to POST them and, if successful, delete the sent messages from the SQS queue.

    opt setup
    Docker App (Boto3)->SQS Queue:Create
    Docker App (Boto3)->SQS Queue:Set Policy
    Docker App (Boto3)->SQS Queue:Set Topic Subscription
    SQS Queue->SNS Topic: Subscribe to topic
    end
    loop application
    Cloud Formation->SNS Topic: Send stack events
    SNS Topic->SQS Queue: Send notification
    SQS Queue->SQS Queue: Make notify message
    Docker App (Boto3)->SQS Queue:Get Messages
    Docker App (Boto3)->Webhook:POST JSON Message
    Docker App (Boto3)->SQS Queue:Remove Messages
    end

Why SNS Topic Webhooks?

If we want to do DevOps in AWS we can use CloudFormation for managing stacks. Commonly a stack would be a combination of both network and instance topology to support a specific use case like running a performance test on a feature, or PEN testing.

Stacks have a lifecycle. As they are stood up or torn down they go through a number of sub-activities that we want visibility into.

So when we create that stack we want messages to tell us what is going on, but CloudFormation only lets you send notifications to AWS SNS (Simple Notification Service). SNS is a general push notification service, so there is no native mechanism to drive messages out of the system to an arbitrary webhook consumer like ours. In the “Advanced” settings of the stack creation we can set the topic.

So it’s possible to have the messages go to a topic, but how do they get to our website that can consume them? What we need is a queue subscribed to the topic, one that can act as a loading dock for sending messages out to our webhook. So we are also going to need SQS.

Using Boto3 Clients for SNS and SQS

We will need clients for both the SNS (topics) and SQS (queues) services. These clients are used both in the setup of the queue and the application loop.

    import boto3

    # One client per service; the second positional argument is the region name.
    sns = boto3.client(
        'sns',
        config["AWS_REGION_NAME"],
        aws_access_key_id=config["AWS_ACCESS_KEY_ID"],
        aws_secret_access_key=config["AWS_SECRET_ACCESS_KEY"])

    sqs = boto3.client(
        'sqs',
        config["AWS_REGION_NAME"],
        aws_access_key_id=config["AWS_ACCESS_KEY_ID"],
        aws_secret_access_key=config["AWS_SECRET_ACCESS_KEY"])

Auto Subscribing to SNS Topics with SQS

If our queue is just responsible for consuming messages from our topic, shouldn’t it be possible to have our application automatically create the queue and subscribe it to the topic? Yes. Let’s make things easier. To do this we use Boto3, a Python client library for AWS. This represents the first half of the responsibility of the Boto3 Docker App.

Creating a SQS Queue from a SNS Topic

    # Creating a topic is idempotent, so if it already exists
    # then we will just get the topic returned.
    topic = sns.create_topic(Name=topic_name)

    # Creating a queue is idempotent also
    queue_name = "{0}-q".format(topic_name)
    topic_arn = topic['TopicArn']
    queue = sqs.create_queue(QueueName=queue_name)

Setting The Policy for the Queue

We need a policy that will allow the topic to send messages to the queue when notifications are sent to the topic. This is a Resource Policy, which is different than an IAM user policy.

    queue_policy_statement = {
        "Version": "2008-10-17",
        "Id": "sns-publish-to-sqs",
        "Statement": [{
            "Sid": "auto-subscribe",
            "Effect": "Allow",
            "Principal": {
                "AWS": "*"
            },
            "Action": "SQS:SendMessage",
            "Resource": "{queue_arn}",
            "Condition": {
                "StringLike": {
                    "aws:SourceArn": "{topic_arn}"
                }
            }
        }]
    }

The application then uses this policy and sets the attribute for the queue to accept it:

    import json

    q_attributes = sqs.get_queue_attributes(QueueUrl=queue['QueueUrl'], AttributeNames=['QueueArn'])
    queue_arn = q_attributes['Attributes']['QueueArn']

    # setup the statement
    queue_policy_statement['Id'] = "sqs-policy-{0}".format(queue_name)
    queue_policy_statement['Statement'][0]['Sid'] = "sqs-statement-{0}".format(queue_name)
    queue_policy_statement['Statement'][0]['Resource'] = queue_arn
    queue_policy_statement['Statement'][0]['Condition']['StringLike']['aws:SourceArn'] = topic_arn
    queue_policy_statement_merged = json.dumps(queue_policy_statement, indent=4)
    #print queue_policy_statement_merged

    # The policy is passed to SQS as a JSON string in the 'Policy' attribute.
    set_policy_response = sqs.set_queue_attributes(
        QueueUrl=queue['QueueUrl'],
        Attributes={
            'Policy': queue_policy_statement_merged
        }
    )
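The steps above edit a shared template dict in place. As an alternative sketch, the same policy can be built fresh from its three inputs. The helper name `build_queue_policy` and the ARNs in the example call are made up for illustration and are not part of the project.

```python
import json


def build_queue_policy(queue_name, queue_arn, topic_arn):
    """Return the SQS resource policy as a JSON string.

    Hypothetical helper: it mirrors the policy shown in this section but
    builds a new dict on every call instead of mutating a template.
    """
    policy = {
        "Version": "2008-10-17",
        "Id": "sqs-policy-{0}".format(queue_name),
        "Statement": [{
            "Sid": "sqs-statement-{0}".format(queue_name),
            "Effect": "Allow",
            "Principal": {"AWS": "*"},
            "Action": "SQS:SendMessage",
            "Resource": queue_arn,
            # Only messages whose source is this topic are allowed in.
            "Condition": {"StringLike": {"aws:SourceArn": topic_arn}},
        }],
    }
    return json.dumps(policy, indent=4)


# Made-up ARNs, for illustration only.
policy_json = build_queue_policy(
    "example-topic-q",
    "arn:aws:sqs:us-west-2:123456789012:example-topic-q",
    "arn:aws:sns:us-west-2:123456789012:example-topic",
)
```

The returned string is what would be passed as the `Policy` attribute in `set_queue_attributes`.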

Subscribing The SQS Queue

We now have a queue that is ready to accept topic messages. The only thing left is to subscribe the queue to the topic so it starts receiving them.

    # Ensure that we are subscribed to the SNS topic
    topic = sns.subscribe(TopicArn=topic_arn, Protocol='sqs', Endpoint=queue_arn)

OK. The queue is set to start getting messages, and we are ready for our application to send them on.
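To see the whole setup sequence in one place, here is a rough sketch that chains the calls from this section in order. The function name `ensure_subscription`, the `build_policy` callable, and the two `Fake*` classes are assumptions for illustration. The fakes only stand in for Boto3 clients so the sketch runs without AWS credentials.

```python
def ensure_subscription(sns, sqs, topic_name, build_policy):
    """Create topic and queue if needed, set the policy, then subscribe.

    sns and sqs are expected to be Boto3 clients (or stand-ins with the same
    methods). build_policy is a hypothetical callable taking
    (queue_name, queue_arn, topic_arn) and returning the policy JSON string.
    """
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    queue_name = "{0}-q".format(topic_name)
    queue_url = sqs.create_queue(QueueName=queue_name)["QueueUrl"]
    attributes = sqs.get_queue_attributes(
        QueueUrl=queue_url, AttributeNames=["QueueArn"])
    queue_arn = attributes["Attributes"]["QueueArn"]
    # The policy must be in place before the topic can deliver to the queue.
    sqs.set_queue_attributes(
        QueueUrl=queue_url,
        Attributes={"Policy": build_policy(queue_name, queue_arn, topic_arn)})
    sns.subscribe(TopicArn=topic_arn, Protocol="sqs", Endpoint=queue_arn)
    return {"topic_arn": topic_arn, "queue_url": queue_url, "queue_arn": queue_arn}


class FakeSNS:
    """Stand-in for the SNS client; records subscriptions in memory."""

    def __init__(self):
        self.subscriptions = []

    def create_topic(self, Name):
        return {"TopicArn": "arn:aws:sns:us-west-2:123456789012:" + Name}

    def subscribe(self, TopicArn, Protocol, Endpoint):
        self.subscriptions.append((TopicArn, Protocol, Endpoint))
        return {"SubscriptionArn": TopicArn + ":example"}


class FakeSQS:
    """Stand-in for the SQS client; records queue attributes in memory."""

    def __init__(self):
        self.attributes = {}

    def create_queue(self, QueueName):
        return {"QueueUrl": "https://sqs.example.invalid/123456789012/" + QueueName}

    def get_queue_attributes(self, QueueUrl, AttributeNames):
        name = QueueUrl.rsplit("/", 1)[-1]
        return {"Attributes": {"QueueArn": "arn:aws:sqs:us-west-2:123456789012:" + name}}

    def set_queue_attributes(self, QueueUrl, Attributes):
        self.attributes[QueueUrl] = Attributes
        return {}


fake_sns, fake_sqs = FakeSNS(), FakeSQS()
result = ensure_subscription(
    fake_sns, fake_sqs, "example-topic", lambda name, queue, topic: "{}")
```

With real clients you would pass the `sns` and `sqs` objects created earlier instead of the fakes.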

The Message Consumer Application

Now we have a queue. We want an application loop to listen to that queue, consume messages, send them to our webhook, and then delete them from the queue once they have successfully been sent.

The Application Loop

The job of the application loop is to get messages from the queue and POST them to our endpoint. It brings it all together in an endless application loop, which is “the application”.

First we check the queue for messages and put them in a collection:

    def check_queue(sqs, queue, poll_interval):
        """
        Poll the queue, waiting up to poll_interval seconds, and
        return the received messages as a list of dicts.
        """
        messages_model = []
        messages_response = sqs.receive_message(
            QueueUrl=queue['QueueUrl'],
            WaitTimeSeconds=poll_interval
        )
        # The "Messages" key is absent when nothing arrived in time.
        if "Messages" in messages_response:
            for message in messages_response['Messages']:
                messages_model.append({
                    "body": message['Body'],
                    "queue_url": queue['QueueUrl']
                })
        return messages_model
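To delete a message later, SQS needs the `ReceiptHandle` that comes back with each received message. Here is a sketch of a variant that keeps it and also asks for up to ten messages per poll. The name `receive_batch`, the `receipt_handle` key, and the `FakeSQS` stand-in are assumptions for illustration.

```python
def receive_batch(sqs, queue_url, wait_seconds, max_messages=10):
    """Long-poll the queue and keep what is needed to delete each message.

    Hypothetical variant of check_queue. SQS accepts 1 to 10 for
    MaxNumberOfMessages and 0 to 20 for WaitTimeSeconds.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=wait_seconds,
    )
    return [
        {
            "body": message["Body"],
            "queue_url": queue_url,
            "receipt_handle": message["ReceiptHandle"],
        }
        for message in response.get("Messages", [])
    ]


class FakeSQS:
    """Stand-in for the SQS client; hands out its messages exactly once."""

    def __init__(self, messages):
        self.pending = list(messages)

    def receive_message(self, QueueUrl, MaxNumberOfMessages, WaitTimeSeconds):
        batch = self.pending[:MaxNumberOfMessages]
        self.pending = self.pending[MaxNumberOfMessages:]
        # Like the real service, omit "Messages" when there is nothing to return.
        return {"Messages": batch} if batch else {}


fake_sqs = FakeSQS([{"Body": "stack event", "ReceiptHandle": "rh-1"}])
first = receive_batch(fake_sqs, "https://sqs.example.invalid/q", wait_seconds=3)
second = receive_batch(fake_sqs, "https://sqs.example.invalid/q", wait_seconds=3)
```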

Then we need a message sender method that POSTs them over HTTP:

    def post_message(message, config):
        # Serialize the message and POST it to the configured webhook endpoint.
        message_json = json.dumps(message)
        logging.info("sending message: {0}".format(message_json))
        req = urllib2.Request(config['POST_MESSAGE_ENDPOINT'])
        req.add_header('Content-Type', 'application/json')
        response = None  # stays None when the POST fails
        try:
            response = urllib2.urlopen(req, message_json)
            logging.info("response: {0}".format(response.read()))
        except urllib2.HTTPError as e:
            logging.error("HTTPError code:{0} body:{1}".format(e.code, e.read()))
        return response
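The sender in this article uses `urllib2`, which only exists on Python 2. If you are on Python 3, a roughly equivalent sketch with `urllib.request` could look like this. The split into `build_webhook_request` and a boolean-returning `post_message`, the `timeout` parameter, and the localhost URL are our assumptions here, not the project’s code.

```python
import json
import logging
import urllib.error
import urllib.request


def build_webhook_request(endpoint, message):
    """Build the JSON POST request without sending it."""
    body = json.dumps(message).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_message(message, endpoint, timeout=10):
    """POST the message and return True only if the webhook accepted it."""
    req = build_webhook_request(endpoint, message)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as response:
            logging.info("response: %s", response.read().decode("utf-8", "replace"))
            return 200 <= response.status < 300
    except urllib.error.HTTPError as e:
        # The server answered, but with an error status.
        logging.error("HTTPError code:%s body:%s", e.code, e.read())
    except urllib.error.URLError as e:
        # The server could not be reached at all.
        logging.error("URLError: %s", e.reason)
    return False


# Placeholder endpoint; nothing is sent by building the request.
example = build_webhook_request("http://localhost:5020/webhook", {"body": "hello"})
```

Returning a plain boolean makes it easy for the caller to decide whether a message is safe to delete from the queue.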

We bring it all together in an endless loop:

    listening = True
    while listening:
        try:
            messages_model = check_queue(sqs, queue, int(config['MESSAGE_LOOP_WAIT_SECS']))
            if len(messages_model) > 0:
                logging.info("messages received: {0}".format(len(messages_model)))
                for message in messages_model:
                    message['topic_arn'] = topic_arn
                    message['queue_arn'] = queue_arn
                    post_message(message, config)
                    logging.info("message sent.")
        except (KeyError, AttributeError, ValueError, NameError) as ke:
            print("error: {0}".format(ke))
        except KeyboardInterrupt:
            listening = False
            signal.signal(signal.SIGINT, handle_ctrl_c)
        except:
            logging.critical("Unexpected error: {0}".format(sys.exc_info()[0]))
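The loop as shown posts messages, but the delete step from the sequence is not visible in it. A minimal sketch of that step follows, assuming each message dict also carries the SQS `ReceiptHandle` under a hypothetical `receipt_handle` key and that the sender returns something truthy on success. `deliver_batch` and `FakeSQS` are illustrative names.

```python
def deliver_batch(sqs, messages, send):
    """Send each message and delete only the ones that were delivered.

    send is any callable that takes a message dict and returns a truthy
    value on success. Messages that fail are left on the queue, so SQS
    makes them visible again after the visibility timeout.
    """
    delivered = 0
    for message in messages:
        if send(message):
            sqs.delete_message(
                QueueUrl=message["queue_url"],
                ReceiptHandle=message["receipt_handle"],
            )
            delivered += 1
    return delivered


class FakeSQS:
    """Stand-in for the SQS client; records deletions in memory."""

    def __init__(self):
        self.deleted = []

    def delete_message(self, QueueUrl, ReceiptHandle):
        self.deleted.append((QueueUrl, ReceiptHandle))
        return {}


fake_sqs = FakeSQS()
batch = [
    {"body": "ok", "queue_url": "https://sqs.example.invalid/q", "receipt_handle": "rh-1"},
    {"body": "fails", "queue_url": "https://sqs.example.invalid/q", "receipt_handle": "rh-2"},
]
# Pretend the webhook rejects the second message.
count = deliver_batch(fake_sqs, batch, send=lambda m: m["body"] == "ok")
```

Deleting only after a successful POST means a webhook outage delays messages instead of losing them.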

Configuring Our Application

Ultimately we will be building a Docker container for our microservice, but until that is revealed (see the section below) we need some basic properties to configure the application so we can run and test it locally.
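The properties file used in this section is a list of plain `KEY=value` lines, some with quoted values. The article does not show how the application loads it, so this is just one way it could be read. The function name `parse_properties` and the sample values are made up.

```python
def parse_properties(text):
    """Parse KEY=value lines into a dict, dropping surrounding double quotes.

    Hypothetical loader: blank lines, comment lines starting with '#', and
    lines without '=' are skipped.
    """
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may contain '=' themselves.
        key, value = line.split("=", 1)
        config[key.strip()] = value.strip().strip('"')
    return config


sample = """
# illustrative values only
AWS_REGION_NAME="us-west-2"
MESSAGE_LOOP_WAIT_SECS=3
POST_MESSAGE_ENDPOINT="http://localhost:5020/webhook?team=example"
"""
config = parse_properties(sample)
```

All values come back as strings, which is why the application loop wraps `MESSAGE_LOOP_WAIT_SECS` in `int(...)`.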

    AWS_ACCESS_KEY_ID=[access_key]
    AWS_SECRET_ACCESS_KEY=[secret]
    AWS_REGION_NAME="us-west-2"
    LOG_LEVEL="INFO"
    AWS_SNS_TOPIC_NAME="dronze-qlearn-cf"
    MESSAGE_LOOP_WAIT_SECS=3
    POST_MESSAGE_ENDPOINT="http://ec2-35-167-29-231.us-west-2.compute.amazonaws.com:5020/webhook/notification/T1BGUBKQR/aws-sns/dronze-qlearn-cf"

The Application User IAM Roles

The user that you used for the application configuration needs to be able to listen to topics, create queues, send notifications, and get messages. I just set up full access, but you could probably tighten the policy quite a bit:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Action": [
                    "sqs:*","sns:*"
                ],
                "Effect": "Allow",
                "Resource": "*"
            }
        ]
    }

Installing the Virtual Environment

A virtual environment installer is provided called install.sh.

    $ bash ./install.sh
    $ source ../ve-sns-webhook/bin/activate

Running The Application Locally

    $ python application.py --config ~/config/local/snswebhook.properties
    starting.
    topic_name:dronze-qlearn-cf
    INFO 2017-03-29 15:29:50,529 _new_conn 735 : Starting new HTTPS connection (1): sns.us-west-2.amazonaws.com
    INFO 2017-03-29 15:29:50,724 _new_conn 735 : Starting new HTTPS connection (1): us-west-2.queue.amazonaws.com

Pulling and Running The Docker Container From DockerHub

Well we went and did it, we made it a docker container. This container doesn’t need to run in AWS, it can run anywhere on the internet because it uses the Boto3 client to make the calls.

Log in to the host machine and pull the container:

    $ docker pull claytantor/sns-webhook:latest

Put the snswebhook.properties file you made and tested with (above) on the host machine in the ${CONFIG_DIR} directory that the Docker container will mount. The container expects that file to be there.

Just run it!

    $ export CONFIG_DIR=/home/ubuntu/config
    $ docker run -t -d --name snswebhook -v ${CONFIG_DIR}:/mnt/config claytantor/sns-webhook:latest

Testing It

In the SNS console in AWS you can publish a test message to the topic.

Once you send a message you should see it show up in the logs:

    $ docker logs snswebhook
    INFO 2017-03-29 19:30:40,803 main 211 : messages received: 1
    INFO 2017-03-29 19:30:40,803 post_message 157 : sending message: {"body": "This is an awesome test!", "queue_arn": "arn:aws:sqs:us-west-2:705212546939:dronze-qlearn-cf-q", "queue_url": "https://us-west-2.queue.amazonaws.com/705212546939/dronze-qlearn-cf-q", "topic_arn": "arn:aws:sns:us-west-2:705212546939:dronze-qlearn-cf"}
    INFO 2017-03-29 19:30:40,892 post_message 163 : response: {"notifier_name": "dronze-qlearn-cf", "message": "succeed", "event_type": "aws-sns", "team": "T1BGUBKQR"}
    INFO 2017-03-29 19:30:40,892 main 217 : message sent.
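If you don’t have a webhook endpoint handy while testing, a throwaway receiver built on Python 3’s standard library can stand in for one. This is only a sketch: the names `decode_notification`, `WebhookHandler`, and `run`, the port, and the reply body are all assumptions, and it is not the Dronze endpoint.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Notifications received so far, kept in memory for inspection.
received = []


def decode_notification(raw_body):
    """Decode the JSON document that the service POSTs."""
    return json.loads(raw_body.decode("utf-8"))


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes announced by the client.
        length = int(self.headers.get("Content-Length", 0))
        received.append(decode_notification(self.rfile.read(length)))
        reply = json.dumps({"message": "succeed"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)


def run(host="0.0.0.0", port=5020):
    """Serve until interrupted. Call this yourself; it blocks."""
    HTTPServer((host, port), WebhookHandler).serve_forever()


# The decoder can be tried on its own without starting the server.
sample = decode_notification(b'{"body": "This is an awesome test!"}')
```

Call `run()` in a separate terminal and point `POST_MESSAGE_ENDPOINT` at that host and port to watch messages arrive.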

That’s it! You have an SNS webhook syndication service. Enjoy.

Credits: This story is heavily influenced by Eric Hammond’s SNS and SQS article on his blog Alestic.